Load Balancing

Load Balancing: What is it and How Can it Help You?

With the rise of cloud computing, IT departments are shifting their focus to Service-Oriented Architecture (SOA) and Software-as-a-Service (SaaS) models. To meet these new demands, many are turning to virtualization to reduce operating costs while increasing uptime and flexibility. In this post, we explain what load balancing is and how it can help optimize your systems.

What is Load Balancing?

Load balancing is the process of distributing a workload across multiple servers or resources. Its goal is to optimize resource usage while improving performance. It is commonly used to distribute network traffic and database or application requests across multiple servers. For businesses, load balancing is especially helpful for handling peak traffic and for keeping systems responsive as the number of users grows.

For example, suppose a database receives more requests than it can handle on its own. Load balancing can distribute those requests across additional database servers, spreading the work over a larger pool of resources.

Load balancing helps prevent servers from being overworked. It also helps avoid:

- Slowdowns

- Dropped requests

- Server failures

How does Load Balancing work?

Load balancing can be performed:

- By physical servers: hardware load balancers

- By virtualized servers: software load balancers

- As a cloud service: Load Balancer as a Service (LBaaS), such as AWS Elastic Load Balancing

Load balancing can also be performed by an Application Delivery Controller (ADC) that includes load balancing capabilities, or by a dedicated, specialized load balancer.

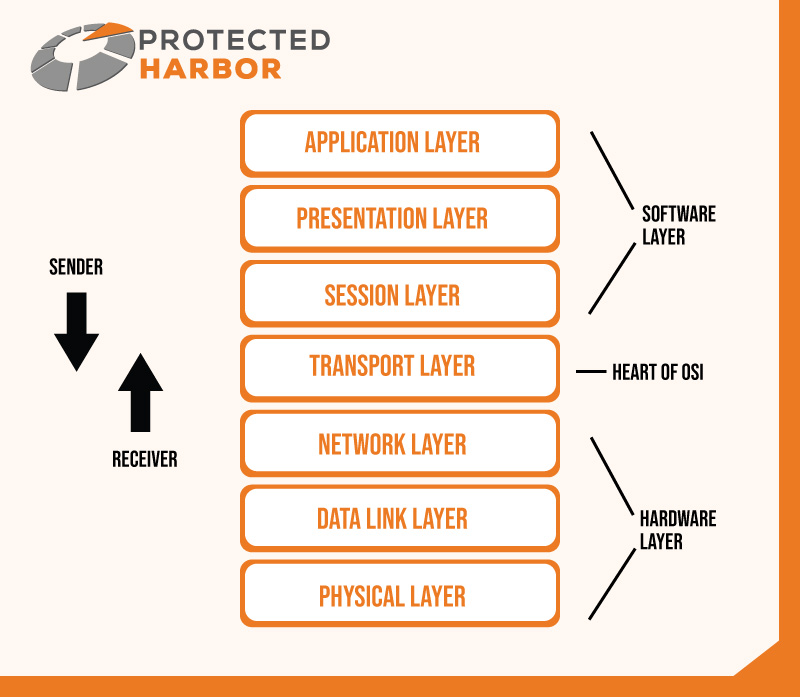

Load balancers direct traffic at Layer 4 or Layer 7 of the Open Systems Interconnection (OSI) model. A load balancer advertises its own address as the destination IP address of the service or website, receives incoming client requests, and then chooses which server will handle each request:

- Balancers at Layer 4 (L4 OSI Transport layer) do not examine the contents of individual packets. They use Network Address Translation (NAT) to route requests and responses between the chosen server and the client, and they base their routing decisions on the IP addresses and ports of incoming packets.

- Balancers at Layer 7 (L7 OSI Application layer) route traffic at the application level. They inspect the content of each incoming request. Unlike L4 balancers, L7 balancers use a variety of criteria to direct client requests to particular servers, including HTTP headers, SSL session IDs, and content categories (text, graphics, video, etc.).

An L4 balancer requires less processing power than an L7 balancer. L7 balancers, however, can be more effective, because they base their routing decisions on context-based characteristics of each request.
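To make the L4/L7 difference concrete, here is a minimal Python sketch that is not tied to any real load balancer product. The `Request` class, the pool names, and the routing rules are hypothetical. The L4 function sees only addresses and ports and hashes them to pick a server; the L7 function inspects the request path and an `Accept` header to choose a content-specific pool.

```python
import zlib
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Request:
    # Visible at Layer 4: addresses and ports from the packet headers.
    client_ip: str
    client_port: int
    dest_port: int
    # Visible only at Layer 7, after inspecting the request content.
    path: str = "/"
    headers: Dict[str, str] = field(default_factory=dict)  # lowercase header names


def route_l4(req: Request, servers: List[str]) -> str:
    """Layer 4: choose a server from IP address and port only."""
    key = f"{req.client_ip}:{req.client_port}:{req.dest_port}".encode()
    return servers[zlib.crc32(key) % len(servers)]


def route_l7(req: Request) -> str:
    """Layer 7: choose a (hypothetical) pool by inspecting the request itself."""
    accept = req.headers.get("accept", "")
    if req.path.startswith("/video/") or accept.startswith("video/"):
        return "video-pool"
    if req.path.startswith("/images/") or accept.startswith("image/"):
        return "image-pool"
    return "web-pool"


req = Request("203.0.113.5", 51234, 443, path="/video/intro.mp4")
print(route_l4(req, ["app1", "app2", "app3"]))  # same server for the same address/port tuple
print(route_l7(req))  # video-pool
```

The L4 function never looks at `path` or `headers`, which is why it is cheaper; the L7 function can make smarter choices only because it reads them.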

Global Server Load Balancing (GSLB) is also available. A GSLB can direct traffic between geographically distributed servers housed in on-premises data centers, public clouds, or private clouds. GSLBs are typically configured to route each client request to the geographically nearest server or to the servers with the fastest response times.
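As a rough illustration of nearest-server routing, the Python sketch below picks the closest healthy region by great-circle (haversine) distance. The region names and coordinates are hypothetical, and real GSLB products often make this decision through DNS and may weigh measured response times rather than pure distance.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Region:
    name: str
    lat: float
    lon: float
    healthy: bool = True


def distance_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points using the haversine formula."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def pick_region(client_lat: float, client_lon: float, regions: List[Region]) -> Region:
    """Return the nearest healthy region; unhealthy regions are skipped."""
    healthy = [r for r in regions if r.healthy]
    if not healthy:
        raise RuntimeError("no healthy regions available")
    return min(healthy, key=lambda r: distance_km(client_lat, client_lon, r.lat, r.lon))


regions = [
    Region("us-east", 40.71, -74.01),  # hypothetical region in New York
    Region("eu-west", 53.35, -6.26),   # hypothetical region in Dublin
    Region("ap-south", 19.08, 72.88),  # hypothetical region in Mumbai
]
london = (51.51, -0.13)
print(pick_region(*london, regions).name)  # eu-west
regions[1].healthy = False                 # simulate an outage in eu-west
print(pick_region(*london, regions).name)  # us-east
```

The second call shows traffic failing over to the next-nearest region when the closest one is down.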

What are the Benefits of Load Balancing?

There are numerous benefits to load balancing, including:

- Efficiency: Load balancers spread requests across servers on a WAN (Wide Area Network) and the internet so that no single server is overloaded. Because multiple servers handle requests concurrently, response times are also shortened.

- Flexibility: Servers can be added to or removed from server groups as needed, so a single server can be taken out of service for maintenance or upgrades while the others continue processing requests.

- High Availability: Load balancers send traffic only to servers that are active, so if one server fails, the others can still process requests. Large commercial sites such as Amazon, Google, and Facebook deploy thousands of load balancers and related application servers across the globe. Small businesses can also use load balancers to direct traffic to backup servers.

- Redundancy: Because multiple servers are available, processing continues even when one server fails.

- Scalability: When demand rises, additional servers can be deployed automatically to a server group without interrupting services. Servers can also be removed from the group without impacting service after high-volume traffic events are over.
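The high availability, flexibility, and scalability points above all depend on the balancer knowing which servers are in the pool and which are healthy. Here is a minimal Python sketch of that bookkeeping. The `ServerPool` class and its method names are hypothetical, and a real balancer would update health status from periodic health checks rather than manual calls.

```python
from typing import Dict, List


class ServerPool:
    """Tracks pool membership and health; hands out only healthy servers."""

    def __init__(self, servers: List[str]) -> None:
        self._healthy: Dict[str, bool] = {s: True for s in servers}
        self._counter = 0

    def add(self, server: str) -> None:
        """Scale out: a new server joins the rotation immediately."""
        self._healthy[server] = True

    def remove(self, server: str) -> None:
        """Scale in, or take a server out for maintenance."""
        self._healthy.pop(server, None)

    def set_health(self, server: str, healthy: bool) -> None:
        """Record the result of a (hypothetical) health check."""
        if server in self._healthy:
            self._healthy[server] = healthy

    def active(self) -> List[str]:
        return [s for s, ok in self._healthy.items() if ok]

    def pick(self) -> str:
        """Rotate through healthy servers only."""
        active = self.active()
        if not active:
            raise RuntimeError("no healthy servers in the pool")
        server = active[self._counter % len(active)]
        self._counter += 1
        return server


pool = ServerPool(["app1", "app2", "app3"])
pool.set_health("app2", False)          # app2 fails its health check
print([pool.pick() for _ in range(4)])  # ['app1', 'app3', 'app1', 'app3']
pool.add("app4")                        # extra capacity for a traffic spike
pool.remove("app1")                     # app1 taken out for an upgrade
print(pool.active())                    # ['app3', 'app4']
```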

GSLB offers several further advantages over conventional load balancing configurations, including:

- Disaster Recovery: If a local data center outage occurs, load balancers at other data centers around the world can pick up the traffic.

- Compliance: Load balancers can be configured to comply with local legal standards.

- Performance: Routing clients to the closest server reduces network latency.

Common Load Balancing Algorithms

Load balancers use algorithms to choose where to send client requests. Several of the more popular load-balancing algorithms are as follows:

Least Connection Method: Clients are sent to the server with the fewest active connections.
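A minimal Python sketch of the least connection method, assuming the balancer is told whenever a connection opens and closes. The class and method names are hypothetical; ties go to the server listed first.

```python
from typing import Dict, List


class LeastConnectionsBalancer:
    def __init__(self, servers: List[str]) -> None:
        # Number of currently open connections per server.
        self.open_connections: Dict[str, int] = {s: 0 for s in servers}

    def acquire(self) -> str:
        """Pick the server with the fewest open connections and count the new one."""
        server = min(self.open_connections, key=self.open_connections.__getitem__)
        self.open_connections[server] += 1
        return server

    def release(self, server: str) -> None:
        """Call when a connection to `server` closes."""
        self.open_connections[server] -= 1


lb = LeastConnectionsBalancer(["app1", "app2", "app3"])
first = [lb.acquire() for _ in range(3)]  # ['app1', 'app2', 'app3']
lb.release("app2")                        # app2's connection closes
print(lb.acquire())                       # app2 (now has 0 open connections)
```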

Least Bandwidth Method: Clients are directed to the server that is currently serving the least amount of traffic, measured in bandwidth.
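The least bandwidth method can be sketched the same way, assuming current throughput figures (in Mbps) arrive from some monitoring source. The class name and the `report` method are hypothetical.

```python
from typing import Dict, List


class LeastBandwidthBalancer:
    def __init__(self, servers: List[str]) -> None:
        # Most recent throughput measurement for each server, in Mbps.
        self.current_mbps: Dict[str, float] = {s: 0.0 for s in servers}

    def report(self, server: str, mbps: float) -> None:
        """Store the latest bandwidth reading for a server."""
        self.current_mbps[server] = mbps

    def pick(self) -> str:
        """Route to the server currently serving the least traffic."""
        return min(self.current_mbps, key=self.current_mbps.__getitem__)


lb = LeastBandwidthBalancer(["app1", "app2", "app3"])
lb.report("app1", 120.0)  # busy streaming video
lb.report("app2", 35.5)
lb.report("app3", 80.0)
print(lb.pick())          # app2
```

Unlike the least connection method, this favors servers moving less data, so one long video stream can weigh more than many small page requests.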

Least Response Time Method: Clients are routed to the server with the fastest response time. Least response time is sometimes combined with the least connection method to create a two-tiered balancing system.
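One possible way to combine least response time with the least connection method is sketched below in Python. The first tier prefers servers with the fewest open connections, and the second tier breaks ties by the fastest average response time, tracked as an exponentially weighted moving average. The smoothing factor `alpha=0.3`, the class name, and the tier ordering are illustrative assumptions; products differ in how they weigh the two signals.

```python
from typing import Dict, List, Optional


class LeastResponseTimeBalancer:
    def __init__(self, servers: List[str], alpha: float = 0.3) -> None:
        self.alpha = alpha  # weight given to the newest response-time sample
        self.open_connections: Dict[str, int] = {s: 0 for s in servers}
        self.avg_ms: Dict[str, Optional[float]] = {s: None for s in servers}

    def _score(self, server: str):
        # Tier 1: fewest open connections. Tier 2: fastest average response.
        # Servers with no measurements yet count as 0 ms so they get tried.
        return (self.open_connections[server], self.avg_ms[server] or 0.0)

    def acquire(self) -> str:
        server = min(self.open_connections, key=self._score)
        self.open_connections[server] += 1
        return server

    def release(self, server: str, elapsed_ms: float) -> None:
        """Record how long the request took and close the connection."""
        self.open_connections[server] -= 1
        prev = self.avg_ms[server]
        self.avg_ms[server] = (
            elapsed_ms if prev is None
            else self.alpha * elapsed_ms + (1 - self.alpha) * prev
        )


lb = LeastResponseTimeBalancer(["app1", "app2"])
a, b = lb.acquire(), lb.acquire()  # one request to each server
lb.release(a, 120.0)               # app1 answered in 120 ms
lb.release(b, 40.0)                # app2 answered in 40 ms
print(lb.acquire())                # app2: tie on connections, faster response
print(lb.acquire())                # app1: app2 now has an open connection
```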

Hashing Techniques: Data from the client’s network packets, such as the user’s IP address or another form of identification, is hashed to decide which server handles the request, so a particular client is consistently connected to the same server.
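A minimal Python sketch of IP-based hashing. It uses SHA-256 from the standard library so the mapping stays stable across processes and restarts (Python’s built-in `hash()` for strings is randomized per process). The server names are hypothetical. This simple modulo scheme remaps many clients whenever a server is added or removed; consistent hashing is the usual remedy when that matters.

```python
import hashlib
from typing import List


def pick_server(client_ip: str, servers: List[str]) -> str:
    """Map a client IP to a server; the same IP always gets the same server
    as long as the server list does not change."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["app1", "app2", "app3"]
print(pick_server("198.51.100.7", servers))
print(pick_server("198.51.100.7", servers))  # same server as the line above
```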

Round Robin: A rotation list is used to connect clients to the servers in a server group. The first client connects to server 1, the second to server 2, and so forth before returning to server 1 when the list is complete.
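Round robin is the simplest of these to express in code. This Python sketch uses `itertools.cycle` from the standard library to walk the rotation list; the server names are placeholders.

```python
from itertools import cycle
from typing import List


class RoundRobinBalancer:
    def __init__(self, servers: List[str]) -> None:
        # cycle() repeats the list forever: server1, server2, server3, server1, ...
        self._rotation = cycle(servers)

    def pick(self) -> str:
        return next(self._rotation)


lb = RoundRobinBalancer(["server1", "server2", "server3"])
print([lb.pick() for _ in range(5)])
# ['server1', 'server2', 'server3', 'server1', 'server2']
```

Because plain round robin ignores how busy each server is, it works best when servers have similar capacity and requests cost roughly the same.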

Load Balancing Scenarios

The methods described above can be applied to load balancing in many situations. Some of the most common use cases include:

- App servicing: Improving the overall performance of web, mobile, and on-premises applications.

- Network Load Balancing: Evenly distributing requests to frequently accessed internal resources that are not cloud-based, such as email servers, file servers, and video servers, and supporting business continuity.

- Network Adapters: Applying load balancing techniques across separate network adapters that serve the same servers.

- Database Balancing: Distributing data requests among servers to improve responsiveness, integrity, and reliability.
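For the database case, one common approach (assumed here, not something this post prescribes) is to send writes to a primary server and spread reads across read replicas. The Python sketch below classifies a statement by its first keyword, which is a deliberate simplification; the server names are hypothetical.

```python
from itertools import cycle
from typing import List


class DatabaseRouter:
    def __init__(self, primary: str, replicas: List[str]) -> None:
        self.primary = primary
        # Reads rotate across replicas; with no replicas, everything goes to the primary.
        self._replicas = cycle(replicas) if replicas else None

    def route(self, sql: str) -> str:
        is_read = sql.lstrip().upper().startswith("SELECT")
        if is_read and self._replicas is not None:
            return next(self._replicas)
        return self.primary


router = DatabaseRouter("db-primary", ["db-replica1", "db-replica2"])
print(router.route("SELECT * FROM orders"))                   # db-replica1
print(router.route("UPDATE orders SET status = 'shipped'"))   # db-primary
print(router.route("select count(*) from orders"))            # db-replica2
```

Replicas can lag slightly behind the primary, so reads that must see a just-completed write are often sent to the primary as well.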

Load balancing is a fundamental networking function that can be applied almost anywhere to distribute workloads evenly among computing resources. It is an essential part of most modern networks.

Key Takeaway

Load balancing is a crucial component of distributed, scalable deployments on both public and private clouds. Cloud vendors offer a variety of load balancing services that combine the algorithms above, and the best-known providers, including Azure, AWS, and GCP, all offer them.

High-traffic websites choose Protected Harbor’s load balancing services because they are the finest in their field. Our software-based load balancing is far less expensive than hardware-based solutions with comparable features.

With comprehensive load balancing capabilities, you can build a network that delivers applications efficiently.

Placing our technology as a load balancer in front of your application and web server farm improves your website’s effectiveness, performance, and reliability. With Protected Harbor’s support, you can increase customer satisfaction and the return on your IT investments.

We are currently offering free IT audits for a limited time, so contact us today.