What is a Data Center Architecture and how to design one?

As businesses scale and data demands increase, the structure and strategy behind your data center architecture become more critical than ever. Traditional data centers—characterized by rows of servers housed in physical racks—were complex to manage, costly to maintain, and prone to inefficiencies. These systems required constant attention, from patching and updates to power management and cooling—leading to growing investments in data center infrastructure and optimization efforts.

Today, modern organizations are shifting towards more agile and scalable solutions. Enter cloud data center architecture, modular data center design, and edge data center design—innovative models that are transforming the way data is processed and delivered. These modern approaches focus on flexibility, energy efficiency, and proximity to the user, improving performance and reducing latency.

This blog will guide you through the essentials of data center layout and design, exploring how virtualization and cloud integration reshape traditional data center planning. Whether you’re building from scratch or optimizing an existing setup, understanding how to balance physical infrastructure with cloud scalability is key. By leveraging modular and edge designs, businesses can create a resilient and future-ready data center that meets evolving demands.

Types of Data Center Architecture

There are four primary types of data center architecture, each tailored to different needs: mesh network, three-tier or multi-tier model, mesh point of delivery (PoD), and super spine mesh.

- Mesh Network System: The mesh network system facilitates data exchange among interconnected switches, forming a network fabric. It’s a cost-effective option with distributed designs, ideal for cloud services due to predictable capacity and reduced latency.

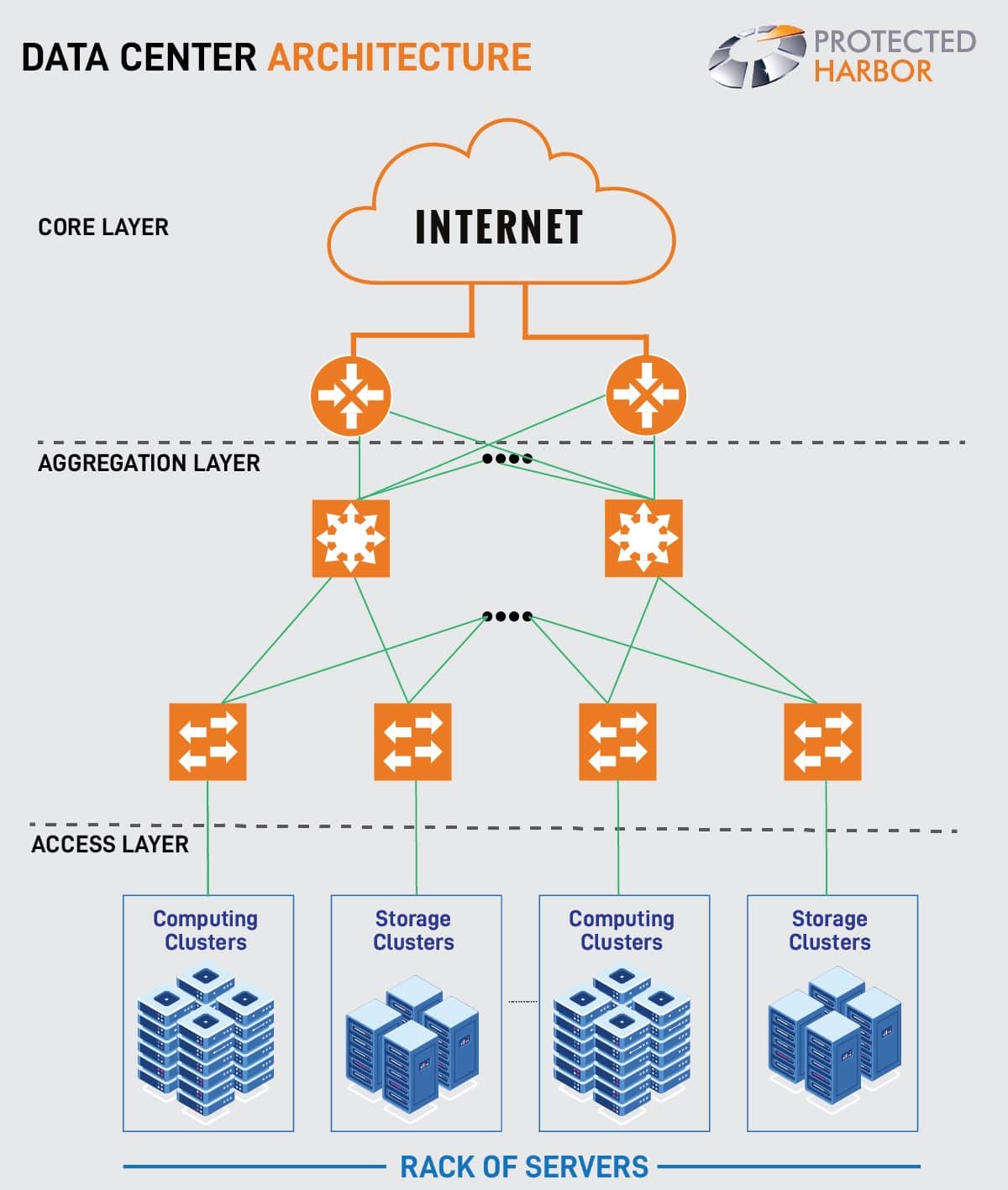

- Three-Tier or Multi-Tier Model: This architecture features core, aggregation, and access layers, facilitating packet movement, integration of service modules, and connection to server resources. It’s widely used in enterprise data centers for its scalability and versatility.

- Mesh Point of Delivery: The PoD design comprises leaf switches interconnected within PoDs, promoting modularity and scalability. It efficiently connects multiple PoDs and super-spine tiers, enhancing data flow for cloud applications.

- Super Spine Mesh: Popular in hyperscale data centers, the super spine mesh includes an additional super spine layer to accommodate more spine switches. This enhances resilience and performance, making it suitable for handling massive data volumes.
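To make the "predictable capacity and reduced latency" point concrete, here is a minimal sketch of a two-tier leaf-spine fabric. It assumes every leaf switch connects to every spine switch; the function names, switch names, and switch counts are hypothetical and chosen only for illustration.

```python
from collections import deque
from itertools import product


def build_leaf_spine(num_spines: int, num_leaves: int) -> dict[str, set[str]]:
    """Return an adjacency map in which every leaf connects to every spine."""
    spines = [f"spine{i}" for i in range(num_spines)]
    leaves = [f"leaf{i}" for i in range(num_leaves)]
    graph: dict[str, set[str]] = {node: set() for node in spines + leaves}
    for spine, leaf in product(spines, leaves):
        graph[spine].add(leaf)
        graph[leaf].add(spine)
    return graph


def hop_count(graph: dict[str, set[str]], src: str, dst: str) -> int:
    """Breadth-first search for the number of links on the shortest path."""
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, hops = queue.popleft()
        if node == dst:
            return hops
        for neighbor in graph[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, hops + 1))
    raise ValueError(f"{dst} is not reachable from {src}")


# Hypothetical fabric: 2 spines, 4 leaves.
fabric = build_leaf_spine(num_spines=2, num_leaves=4)
print(hop_count(fabric, "leaf0", "leaf3"))
```

Under this assumption, traffic between any two different leaf switches crosses the same number of links, which is one reason latency in such fabrics is easy to predict. Adding a super spine tier would add hops between PoDs but keep the path length uniform.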

Fundamentals of a Data Center Architecture

What is a data center architecture?

In simple terms, it describes how computing resources (CPUs, storage, networking, and software) are organized in a data center. As you might expect, the number of possible architectures is almost unlimited; the main constraint is how many resources a company can afford to include. Still, we usually discuss data center network architectures in terms of their essential functionality rather than their many permutations.

A data center is a physical facility where data and computing equipment are stored, enabling central processing, storage, and exchange of data. Modern data center architecture involves planning how switches and servers will connect, typically during the planning and construction phases. This blueprint guides the design and construction of the building, specifying the placement of servers, storage, networking, racks, and resources. It outlines the data center networking architecture, detailing how these components will connect. Additionally, it encompasses the data center security architecture, ensuring secure operations and safeguarding data. Overall, it provides a comprehensive framework for efficient data center operations.

Today’s data centers are becoming much larger and more complex. At this scale, hardware requirements vary from workload to workload and even from day to day. Some workloads require more memory capacity or faster processing than others, so data center optimization becomes necessary.

In such cases, high-end devices can help lower the total cost of ownership (TCO). But when the management and operations staff is large, this strategy can still be costly and ineffective. For this reason, it’s important to choose the right architecture for your organization.

While most modern data centers use virtualized servers, there are other important considerations when designing a data center. The building’s design must take into account the facilities and premises. The choice of technologies and the interactions between the various hardware and software layers will ultimately affect the data center’s performance and efficiency.

For instance, a data center design may need sophisticated fire suppression systems and a control center where staff can monitor server performance and the physical plant. Additionally, a data center should be designed to provide the highest levels of security and privacy.

How to Design a Data Center Architecture

There is more than one answer to the question of how to design a data center architecture. Before implementing any new data center technology, owners should first define the performance parameters and establish a financial model. The design of the architecture must satisfy the performance requirements of the business.

Several considerations are necessary before starting the data center construction. First, the data center premises and facility should be considered. Then, the design should be based on the technology selection. There should be an emphasis on availability. This is often reflected by an operational or Service Level Agreement (SLA). And, of course, the design should be cost-effective.

Another important aspect of data center design is the size of the data center itself. Even when the number of servers and racks is modest, the supporting infrastructure requires a significant amount of space.

For example, the mechanical and electrical equipment a data center depends on takes up considerable room. Additionally, many organizations will need office space, an equipment yard, and IT equipment staging areas. The design must address these needs before a space plan is created.

When selecting the technology for a data center, the architect should understand the tradeoffs between cost, reliability, and scalability. The design should also be flexible enough to allow for the fast deployment and support of new services or applications. Flexibility can provide a competitive advantage in the long run, so careful planning is required. A flexible data center with an advanced architecture that allows for scalability is likely to be more successful.

Availability is essential, and so is security: the data center should be able to withstand attacks and should not be left vulnerable to malicious actors.

By using technologies such as ACLs (access control lists) and IDSs (intrusion detection systems), the data center architecture can protect the business while supporting its mission and objectives. The right architecture can help increase both the company’s revenue and its productivity.
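As an illustration of how an ACL filters traffic, the sketch below evaluates a source address and destination port against an ordered rule list. It assumes first-match semantics with an implicit deny at the end, which is a common convention but not something every product shares; the `AclRule` class, the `evaluate` function, and the addresses are hypothetical.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class AclRule:
    action: str                 # "permit" or "deny"
    source: str                 # CIDR block the rule applies to
    port: Optional[int] = None  # None matches any destination port


def evaluate(rules: list[AclRule], src_ip: str, dst_port: int) -> str:
    """Return the action of the first matching rule; deny when none match."""
    address = ip_address(src_ip)
    for rule in rules:
        in_network = address in ip_network(rule.source)
        port_matches = rule.port is None or rule.port == dst_port
        if in_network and port_matches:
            return rule.action
    return "deny"  # assumed implicit deny at the end of the list


# Hypothetical rule set: order matters because the first match wins.
rules = [
    AclRule("permit", "10.0.0.0/8", 443),
    AclRule("deny", "10.0.0.0/8"),
    AclRule("permit", "192.168.1.0/24", 22),
]

print(evaluate(rules, "10.1.2.3", 443))    # matches the first rule
print(evaluate(rules, "10.1.2.3", 22))     # falls through to the second rule
print(evaluate(rules, "172.16.0.1", 443))  # no rule matches
```

An IDS works differently: rather than blocking by rule, it inspects traffic and raises alerts, so the two are usually deployed together.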

Data center tiers:

Data centers are rated by tier to indicate expected uptime and dependability:

Tier 1 data centers have a single power and cooling path and few, if any, redundant or backup components. They have a projected uptime of 99.671 percent (28.8 hours of downtime annually).

Tier 2 data centers have a single power and cooling path, as well as some redundant and backup components. They have a projected uptime of 99.741 percent (about 22.7 hours of downtime annually).

Tier 3 data centers have multiple power and cooling paths, along with procedures for updating and maintaining them without taking them offline. They have a projected uptime of 99.982 percent (1.6 hours of downtime annually).

Tier 4 data centers are designed to be fully fault-tolerant, with redundancy in every component. They have a projected uptime of 99.995 percent (26.3 minutes of downtime annually).

Your service level agreements (SLAs) and other variables will determine which data center tier you require.
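The annual downtime for each tier can be derived from its uptime percentage. The short sketch below does the arithmetic, assuming a 365-day year (8,760 hours); the function name is illustrative.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap years are ignored


def annual_downtime_hours(uptime_percent: float) -> float:
    """Convert an uptime percentage into expected hours of downtime per year."""
    return (1 - uptime_percent / 100) * HOURS_PER_YEAR


# Uptime percentages taken from the tier descriptions.
tiers = {"Tier 1": 99.671, "Tier 2": 99.741, "Tier 3": 99.982, "Tier 4": 99.995}

for name, uptime in tiers.items():
    hours = annual_downtime_hours(uptime)
    print(f"{name}: {hours:.1f} hours ({hours * 60:.0f} minutes) per year")
```

The same function can be used to check whether an uptime target in an SLA is compatible with a given tier.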

In a data center architecture, core infrastructure services should be the priority. These should include data storage and network services. Traditional data centers use physical components for these functions. In contrast, Platform as a Service (PaaS) hides the physical component layer from the customer.

Nevertheless, both approaches need a strong core infrastructure, which is the primary concern of most organizations because it provides the platform for the business. DCaaS and DCIM are also popular choices among organizations.

Data Center as a Service (DCaaS) is a hosting service providing physical data center infrastructure and facilities to clients. DCaaS allows clients remote access to the provider’s storage, server and networking resources through a Wide-Area Network (WAN).

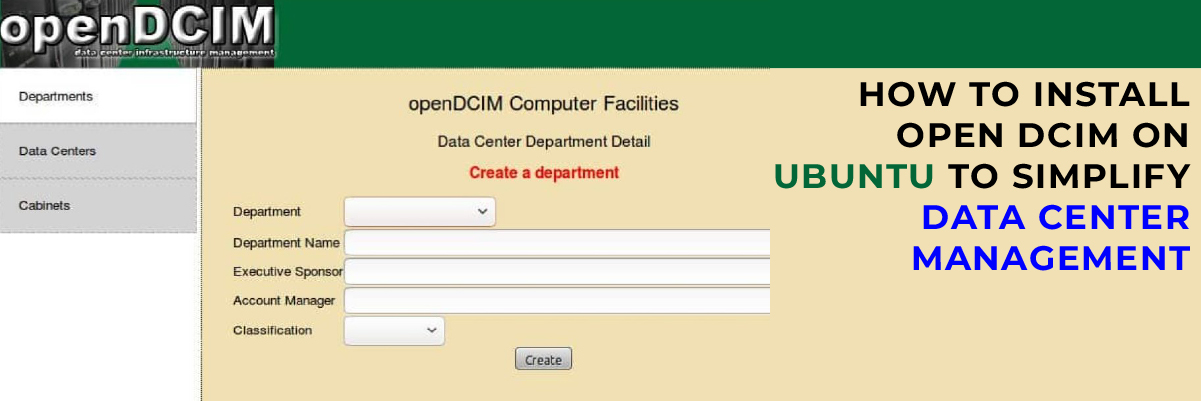

The convergence of IT and building facilities functions inside an enterprise is known as data center infrastructure management (DCIM). A DCIM initiative aims to give managers a comprehensive perspective of a data center’s performance so that energy, equipment, and floor space are all used as efficiently as possible.
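To give a feel for the kind of view a DCIM tool provides, here is a small sketch that summarizes space and power utilization per rack and flags racks above a threshold. The `Rack` fields, the 80 percent threshold, and the sample figures are all assumptions made for illustration; real DCIM products track far more than this.

```python
from dataclasses import dataclass


@dataclass
class Rack:
    name: str
    units_total: int          # rack height in rack units (U)
    units_used: int
    power_capacity_kw: float
    power_draw_kw: float

    @property
    def space_utilization(self) -> float:
        return self.units_used / self.units_total

    @property
    def power_utilization(self) -> float:
        return self.power_draw_kw / self.power_capacity_kw


def racks_over_threshold(racks: list[Rack], threshold: float = 0.8) -> list[str]:
    """Names of racks whose space or power utilization exceeds the threshold."""
    return [
        rack.name
        for rack in racks
        if max(rack.space_utilization, rack.power_utilization) > threshold
    ]


# Hypothetical inventory.
racks = [
    Rack("A1", units_total=42, units_used=30, power_capacity_kw=10.0, power_draw_kw=6.5),
    Rack("A2", units_total=42, units_used=40, power_capacity_kw=10.0, power_draw_kw=7.0),
    Rack("B1", units_total=42, units_used=12, power_capacity_kw=8.0, power_draw_kw=7.2),
]

print(racks_over_threshold(racks))
```

In this sample, one rack is nearly full on space and another is close to its power budget despite being mostly empty, which is the kind of imbalance a combined IT and facilities view is meant to surface.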

Data Center Requirements

To achieve operational efficiency, reliability, and scalability, a data center setup must meet stringent requirements. The following are critical considerations:

1. Reliability and Redundancy: Ensuring high performance and uninterrupted services necessitates robust data center redundancy. This includes having redundant power sources, networking infrastructure, and cooling systems. Data center redundancy is crucial to mitigate the risk of downtime and maintain continuous operations.

2. Scalability: With data volumes growing exponentially, data centers must be scalable to accommodate future growth without compromising performance. Scalable infrastructure allows for seamless expansion and adaptation to increasing demands, ensuring long-term operational effectiveness.

3. Security: Data center security is paramount due to the sensitive information stored within these facilities. To protect data integrity and privacy, stringent security measures such as access controls, continuous monitoring, and encryption are essential. Robust data center security protocols help safeguard against breaches and unauthorized access.

4. Efficiency: Optimizing data center efficiency is essential for reducing operational expenses and minimizing environmental impact. Efficient energy use in data centers lowers costs and promotes sustainability. Implementing energy-efficient technologies and practices enhances overall data center efficiency, contributing to a greener operation.

By focusing on data center security, efficiency, and redundancy, organizations can ensure their data centers are well-equipped to handle current and future demands while maintaining high performance and reliability.
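The effect of redundancy on reliability can be estimated with basic probability. The sketch below assumes component failures are independent, which real facilities only approximate, and the availability figures are made-up inputs rather than measurements.

```python
def parallel_availability(component_availability: float, count: int) -> float:
    """Availability of `count` redundant components when any one is sufficient."""
    return 1 - (1 - component_availability) ** count


def series_availability(*availabilities: float) -> float:
    """Availability of a chain of subsystems that must all be working."""
    result = 1.0
    for availability in availabilities:
        result *= availability
    return result


# Hypothetical figures: each power feed, cooling unit, and uplink is 99% available.
single = 0.99
power = parallel_availability(single, count=2)
cooling = parallel_availability(single, count=2)
network = parallel_availability(single, count=2)

print(f"One feed:        {single:.4%}")
print(f"Two feeds:       {power:.4%}")
print(f"Whole facility:  {series_availability(power, cooling, network):.4%}")
```

Duplicating a component sharply reduces the chance that the subsystem is down, but the facility as a whole is still limited by the product of its subsystems, which is why redundancy is applied to power, networking, and cooling together.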

Conclusion

Data centers have seen significant transformations in recent years. As enterprise IT demands continue to migrate toward on-demand services, data center infrastructure has transitioned from on-premises servers to virtualized infrastructure that supports workloads across pools of physical infrastructure and multi-cloud environments.

Two key questions remain the same regardless of which current design strategy is chosen.

- How do you manage computation, storage, and networks that are differentiated and geographically dispersed?

- How do you go about doing it safely?

Running your own data center is expensive, you receive no outside assistance, and you also have to add in the cost of your on-site IT personnel. As a result, DCaaS and DCIM have grown in popularity.

Most organizations will benefit from DCaaS and DCIM, but keep in mind that with DCaaS, you are responsible for providing your own hardware and stack maintenance. As a result, you may require additional assistance in maintaining those.

With DCIM, you get a team to manage your stacks for you. The team is responsible for the system’s overall performance, uptime, and needs, as well as its safety and security. You will receive greater support and peace of mind if you partner with the right solution providers who understand your business and requirements.

If you’re seeking to create your data center and want to maximize uptime and efficiency, the Protected Harbor data center is a secure, hardened DCIM that offers unmatched uptime and reliability for your applications and data. This facility can operate as the brain of your data center, offering unheard-of data center stability and durability.

In addition to preventing outages, it enables your growth while providing superior security against ransomware and other attacks. For more information on how we can help create your data center while staying protected, contact us today.