Cyber Attacks and Data Breaches in the USA 2024

The landscape of cyber threats continues to evolve at an alarming rate, and 2024 has been a particularly challenging year for cybersecurity in the USA. From large-scale data breaches to sophisticated ransomware attacks, organizations across various sectors have been impacted. This blog provides a detailed analysis of these events, highlighting major breaches, monthly trends, and sector-specific vulnerabilities. We delve into the most significant incidents, shedding light on the staggering number of records compromised and the industries most affected. Furthermore, we discuss key strategies for incident response and prevention, emphasizing the importance of robust cybersecurity measures to mitigate these risks.

Top U.S. Data Breach Statistics

The sheer volume of data breaches in 2024 underscores the increasing sophistication and frequency of cyber attacks:

- Total Records Breached: 6,845,908,997

- Publicly Disclosed Incidents: 2,741

Top 10 Data Breaches in the USA

A closer look at the top 10 data breaches in the USA reveals a wide range of sectors affected, emphasizing the pervasive nature of cyber threats:

| # | Organization Name | Sector | Known Number of Records Breached | Month |
| --- | --- | --- | --- | --- |
| 1 | Discord (via Spy.pet) | IT services and software | 4,186,879,104 | April 2024 |
| 2 | Real Estate Wealth Network | Construction and real estate | 1,523,776,691 | December 2023 |
| 3 | Zenlayer | Telecoms | 384,658,212 | February 2024 |
| 4 | Pure Incubation Ventures | Professional services | 183,754,481 | February 2024 |
| 5 | 916 Google Firebase websites | Multiple | 124,605,664 | March 2024 |
| 6 | Comcast Cable Communications, LLC (Xfinity) | Telecoms | 35,879,455 | December 2023 |
| 7 | VF Corporation | Retail | 35,500,000 | December 2023 |
| 8 | iSharingSoft | IT services and software | >35,000,000 | April 2024 |
| 9 | loanDepot | Finance | 16,924,071 | January 2024 |
| 10 | Trello | IT services and software | 15,115,516 | January 2024 |

Dell

Records Breached: 49 million

In May 2024, Dell disclosed a cyberattack that put the personal information of 49 million customers at risk. The threat actor, Menelik, told TechCrunch that he infiltrated Dell’s systems by registering partner accounts on the company’s portal. Once those accounts were authorized, he launched a brute-force attack, sending more than 5,000 requests per minute for nearly three weeks without Dell detecting the activity.

Dell remained unaware of the breach until Menelik himself emailed the company several times to report the vulnerability. Although Dell stated that no financial data was compromised, the breach potentially exposed sensitive customer information, including home addresses and order details. Reports suggest that the stolen data is now being sold on hacker forums.
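A sustained rate of more than 5,000 requests per minute from a single partner account is exactly the kind of signal basic rate monitoring can catch. The sketch below is a minimal sliding-window detector in Python, not Dell's tooling or any specific product. The class name `RateAnomalyDetector`, the 60-second window, and the 1,000-request limit are hypothetical values chosen for illustration; a real deployment would derive thresholds from each account's normal traffic.

```python
from collections import deque

# Assumed limits for illustration only; tune against real baseline traffic.
WINDOW_SECONDS = 60.0
MAX_REQUESTS_PER_WINDOW = 1_000


class RateAnomalyDetector:
    """Flags accounts whose request count in a sliding time window exceeds a limit."""

    def __init__(self, window_seconds: float = WINDOW_SECONDS,
                 max_requests: int = MAX_REQUESTS_PER_WINDOW) -> None:
        self.window_seconds = window_seconds
        self.max_requests = max_requests
        # Per-account timestamps (in seconds) of recent requests, oldest first.
        self._events: dict[str, deque] = {}

    def record(self, account_id: str, timestamp: float) -> bool:
        """Record one request and return True if the account is over the limit."""
        events = self._events.setdefault(account_id, deque())
        events.append(timestamp)
        # Evict requests that have fallen out of the sliding window.
        while events and events[0] <= timestamp - self.window_seconds:
            events.popleft()
        return len(events) > self.max_requests


# Simulate the reported pattern: 5,000 requests from one partner account in one minute.
detector = RateAnomalyDetector()
flags = [detector.record("partner-account", i * 60 / 5000) for i in range(5000)]
print(f"first alert at request #{flags.index(True) + 1}")  # first alert at request #1001
```

With these assumed settings, the simulated scraping run trips the alert within its first 12 seconds, long before three weeks pass. A per-account deque keeps memory proportional to the window size, which is why sliding windows are a common choice for this kind of check.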

Bank of America

Records Breached: 57,000

In February 2024, Bank of America disclosed that a ransomware attack on McCamish Systems, one of its service providers, had affected more than 57,000 customers. According to Forbes, the attackers gained unauthorized access to sensitive personal information, including names, addresses, phone numbers, Social Security numbers, account numbers, and credit card details.

The breach was detected on November 24, 2023, during routine security monitoring, but customers were not notified until February 1, 2024, about ten weeks later, a delay that may have violated federal notification requirements. The incident underscores the importance of encrypting sensitive data and notifying affected customers promptly.
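Notification timelines are easy to misstate, so it helps to compute them directly. The Python sketch below measures the gap between the detection and notification dates given for the Bank of America incident. It assumes both dates fall in the 2023 to 2024 window implied by the February 2024 disclosure. The helper names are hypothetical, and the 30-day window is a placeholder parameter, not a statement of what any law requires.

```python
from datetime import date


def notification_delay_days(detected: date, notified: date) -> int:
    """Calendar days between breach detection and customer notification."""
    return (notified - detected).days


def exceeds_window(detected: date, notified: date, window_days: int) -> bool:
    """True if customers were notified later than the given window allows."""
    return notification_delay_days(detected, notified) > window_days


# Dates from the Bank of America timeline described above.
detected = date(2023, 11, 24)
notified = date(2024, 2, 1)
print(notification_delay_days(detected, notified))  # 69

# 30 days is a placeholder window; substitute whatever rule actually applies.
print(exceeds_window(detected, notified, window_days=30))  # True
```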

Sector Analysis

Most Affected Sectors

The healthcare, finance, and technology sectors faced the brunt of the attacks, each with unique vulnerabilities that cybercriminals exploited:

- Healthcare: Often targeted for sensitive personal data, resulting in significant breaches.

- Finance: Constantly under threat due to the high value of financial information.

- Technology: Continuous innovation leads to new vulnerabilities, making it a frequent target.

Ransomware Effect

Ransomware continued to dominate the cyber threat landscape in 2024, with notable attacks on supply chains causing widespread disruption. These attacks have highlighted the critical need for enhanced security measures and incident response protocols.

Monthly Trends

Analyzing monthly trends from November 2023 to April 2024 provides insights into the evolving nature of cyber threats:

- November 2023: A rise in ransomware attacks, particularly targeting supply chains.

- December 2023: Significant breaches in the real estate and retail sectors.

- January 2024: Finance and IT services sectors hit by large-scale data breaches.

- February 2024: Telecoms and professional services targeted with massive data leaks.

- March 2024: Multiple sectors affected, with a notable breach involving Google Firebase websites.

- April 2024: IT services and software sectors faced significant breaches, with Discord’s incident being the largest.
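The trends above are qualitative. Tallying the Top 10 table by month makes the volume behind them concrete. The Python sketch below uses only the rows in that table, so it says nothing about the other 2,741 disclosed incidents, and November 2023 does not appear because no top-10 breach is dated then. Because iSharingSoft is listed as ">35,000,000", the sketch uses 35,000,000 as a lower bound. The names `TOP_10_BREACHES` and `records_by_month` are illustrative.

```python
from collections import defaultdict

# Rows from the Top 10 table: (organization, month, known records breached).
# iSharingSoft is listed as ">35,000,000"; 35,000,000 is used as a lower bound.
TOP_10_BREACHES = [
    ("Discord (via Spy.pet)", "2024-04", 4_186_879_104),
    ("Real Estate Wealth Network", "2023-12", 1_523_776_691),
    ("Zenlayer", "2024-02", 384_658_212),
    ("Pure Incubation Ventures", "2024-02", 183_754_481),
    ("916 Google Firebase websites", "2024-03", 124_605_664),
    ("Comcast Cable Communications, LLC (Xfinity)", "2023-12", 35_879_455),
    ("VF Corporation", "2023-12", 35_500_000),
    ("iSharingSoft", "2024-04", 35_000_000),
    ("loanDepot", "2024-01", 16_924_071),
    ("Trello", "2024-01", 15_115_516),
]


def records_by_month(breaches):
    """Sum known records breached per month, returned in chronological order."""
    totals = defaultdict(int)
    for _organization, month, records in breaches:
        totals[month] += records
    # ISO "YYYY-MM" strings sort chronologically.
    return dict(sorted(totals.items()))


for month, total in records_by_month(TOP_10_BREACHES).items():
    print(f"{month}: {total:,}")
```

Even on this small sample, the tally shows how a single incident can dominate a month: Discord alone accounts for nearly all of April 2024's total.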

Incident Response

Key Steps for Effective Incident Management

- Prevention: Implementing robust cybersecurity measures, including regular updates and employee training.

- Detection: Utilizing advanced monitoring tools to identify potential threats early.

- Response: Developing a comprehensive incident response plan and conducting regular drills to ensure preparedness.

- Digital Forensics: Engaging experts to analyze breaches, understand their scope, and prevent future incidents.

This analysis underscores the importance of robust cybersecurity measures and continuous vigilance in mitigating cyber risks. As cyber threats continue to evolve, organizations must prioritize cybersecurity to protect sensitive data and maintain trust.

Solutions to Fight Data Breaches

Breach reports keep coming, showing that even large companies with mature security programs can fall prey to cyber-attacks. Every company, and its customers, is at risk.

Securing sensitive data at rest and in transit can render stolen data useless to attackers. With point-to-point encryption (P2PE) and tokenization, companies can devalue the data they hold, protecting both their brand and their customers.

Protected Harbor developed a robust data security platform that secures online consumer information at entry, in transit, and in storage. Protected Harbor’s solutions offer a comprehensive, omnichannel approach to data security.
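Tokenization works by replacing a sensitive value, such as a card number, with a random token that has no mathematical relationship to the original. Only a separate, tightly controlled vault can map the token back. The Python sketch below shows the idea with an in-memory vault. It is a toy illustration, not Protected Harbor's platform or a PCI-compliant design: the `TokenVault` class and the `tok_` prefix are hypothetical, and P2PE, which encrypts card data at the payment terminal, is not shown. A real vault would be an isolated, encrypted, access-controlled service.

```python
import secrets


class TokenVault:
    """Toy token vault mapping random tokens to sensitive values (in memory only)."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        """Return a random token for value, reusing the existing token if present."""
        if value in self._value_to_token:
            return self._value_to_token[value]
        # Tokens are random, so they reveal nothing about the original value.
        token = "tok_" + secrets.token_hex(16)
        while token in self._token_to_value:  # regenerate on the unlikely collision
            token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        """Look up the original value; only the vault can do this."""
        return self._token_to_value[token]


vault = TokenVault()
card_token = vault.tokenize("4111111111111111")  # a standard test card number
# Downstream systems (order history, analytics) store only card_token.
print(card_token)
print(vault.detokenize(card_token))
```

If a system that stores only tokens is breached, the attacker gets random strings. The sensitive values stay in the vault, which becomes the one component that needs the strongest protection.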

Our Commitment at Protected Harbor

At Protected Harbor, we have always emphasized the security of our clients. As a leading IT Managed Service Provider (MSP) and cybersecurity company, we understand the critical need for proactive measures and cutting-edge solutions to safeguard against ever-evolving threats. Our comprehensive approach includes:

- Advanced Threat Detection: Utilizing state-of-the-art monitoring tools to detect and neutralize threats before they can cause damage.

- Incident Response Planning: Developing and implementing robust incident response plans to ensure rapid and effective action in the event of a breach.

- Continuous Education and Training: Providing regular cybersecurity training and updates to ensure our clients are always prepared.

- Tailored Security Solutions: Customizing our services to meet the unique needs of each client, ensuring optimal protection and peace of mind.

Don’t wait until it’s too late. Ensure your organization’s cybersecurity is up to the task of protecting your valuable data. Contact Protected Harbor today to learn more about how our expertise can help secure your business against the ever-present threat of cyber-attacks.

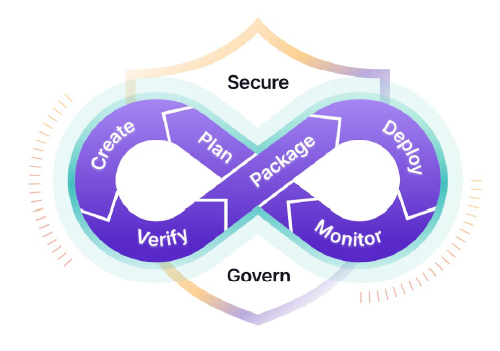
