What are DaaS providers?

DaaS is short for Desktop as a Service. It’s a cloud-based computing solution that gives you access to your desktop over the internet, wherever you are, which makes it well suited to remote working. In other words, it is a form of desktop virtualization delivered by a third-party host. DaaS is also known as a virtual desktop or hosted desktop service.

DaaS Providers

If you’re moving to cloud services to deliver your applications, a growing proportion of those apps will likely be hosted in the cloud. When an application needs storage, networking, and computing resources, you can host it yourself or with a service provider. But you might want to consider a third option: a DaaS provider.

DaaS providers give you on-demand access to infrastructure and app environments from a single provider, often at a lower cost than buying your own servers. They can also provide services like load balancing, high availability, and disaster recovery if needed. In basic terms, DaaS providers are organizations that deliver desktop virtualization services tailored to your needs.

Why should you consider using a DaaS provider?

Data centers are a necessity in today’s digital world. But with so many DaaS offerings and so many different features, choosing the right one can be overwhelming. Fortunately, it is not hard to find the right one once you know what you want.

A DaaS provider can offer increased security for your managed desktops and servers and help ensure that your business continuity is not compromised. Providers can offer multi-factor authentication, 24/7 support, and the facilities to implement a disaster recovery plan on-site. Many data centers also have built-in backup power systems to keep your network running at any time of day.

Desktop as a service (DaaS) providers offer a wide range of hosted desktop solutions. Many can provide turnkey virtual desktop infrastructure (VDI) implementations that support multiple users, but some also offer single-user desktops. Some providers offer additional services and management options, while others provide only essential software.

There are many reasons to consider using a DaaS provider:

- They can allow IT to focus on more strategic projects by taking over day-to-day tasks such as application and OS updates and patches.

- They can simplify the deployment of new desktops by reducing the need for manual configuration.

- They can reduce hardware costs through thin clients or zero clients.

- They can enable BYOD policies by allowing users to access their desktops from any device with an internet connection.

What are some of the benefits of using a DaaS provider?

DaaS has numerous benefits that make it a strong fit for businesses. By adopting DaaS offerings and cloud desktop services, companies can enjoy improved scalability, enhanced data security, and simplified IT management. With DaaS solutions, businesses can provide their workforce with flexible and secure desktop environments, reducing operational overhead and ensuring remote accessibility across various devices.

The most obvious benefit of a DaaS provider is the flexibility it allows your business. This can be particularly advantageous if you need to hire new staff quickly. You can add more desktops and operating systems whenever needed and remove them at short notice.

When you use a DaaS solution, you only pay for what you use, so there’s no need to worry about capital expenditure or over-provisioning.
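
As a rough illustration of the pay-for-what-you-use point, the sketch below compares two billing models over a few months. Every figure in it is a hypothetical placeholder rather than real provider pricing, and it assumes, for simplicity, that a desktop costs the same per month under both models.

```python
def daas_cost(users_per_month, price_per_desktop):
    """Total pay-as-you-go cost: each month bills only the desktops in use."""
    return sum(users * price_per_desktop for users in users_per_month)


def fixed_capacity_cost(users_per_month, price_per_desktop):
    """Total cost when capacity is sized up front for the busiest month."""
    peak = max(users_per_month)
    return peak * price_per_desktop * len(users_per_month)


# Hypothetical headcount over six months (for example, a seasonal hiring spike).
headcount = [20, 20, 35, 50, 30, 20]
# Hypothetical monthly price per desktop, in any currency unit.
price = 40

pay_as_you_go = daas_cost(headcount, price)
provisioned_for_peak = fixed_capacity_cost(headcount, price)
print(pay_as_you_go, provisioned_for_peak)
```

With these made-up inputs, pay-as-you-go totals 7000 against 12000 for capacity sized to the peak month. Real pricing varies by provider and plan, so treat this as a way to frame the comparison rather than a forecast.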

Because desktops and operating systems are hosted offsite and accessed over the internet, it is easy for employees to work from anywhere, a definite plus in an era when remote working and cloud computing are becoming increasingly common.

Another benefit of DaaS solutions such as Citrix Virtual Apps is that they’re easy for IT teams to manage, as the provider does most of the work. The only maintenance required on your part is to keep client machines up to date and running smoothly.

Setting up a desktop virtualization solution using traditional methods can be expensive, so you may save money by using a DaaS provider instead.

Who are the big players in the market?

Stability, security, mobility, and multi-factor authentication are all features to look for in a DaaS provider. The following is a list of the top Desktop as a Service (DaaS) providers in 2021:

- Amazon Web Services – Amazon WorkSpaces

- Microsoft Azure – Windows Virtual Desktop (since renamed Azure Virtual Desktop)

- Protected Harbor Desktop

- Citrix Managed Desktops

- VMware Horizon Cloud

- Cloudalize – DaaS

How to choose the best desktop as a service solution

Choosing the right managed desktop solution can be difficult. First, assess your business needs when deciding on a DaaS platform. Consider whether you’re looking for a secure virtual desktop infrastructure (VDI) solution or need help with end-user support and remote working. Second, look into the solution’s scalability and make sure it fits your current and future IT requirements.

Finally, research the DaaS platform provider’s pricing structure and customer service to make sure you get the best value for your budget. With this in mind, you should be well placed to find a desktop-as-a-service solution that suits your business and lets you take advantage of all the benefits of DaaS.
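
One way to make these steps concrete is a simple weighted scoring sheet. The sketch below is only an illustration: the criteria names, weights, provider labels, and scores are all hypothetical, and you would replace them with your own assessment.

```python
def rank_providers(scores, weights):
    """Return (provider, weighted average score) pairs, best first."""
    total_weight = sum(weights.values())
    ranked = []
    for provider, criteria in scores.items():
        weighted = sum(criteria[name] * weight for name, weight in weights.items())
        ranked.append((provider, weighted / total_weight))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical weights: a higher number means the criterion matters more to you.
weights = {"fit_to_needs": 3, "scalability": 2, "pricing": 2, "support": 1}

# Hypothetical scores from 1 (poor) to 5 (excellent) for two unnamed providers.
scores = {
    "Provider A": {"fit_to_needs": 4, "scalability": 3, "pricing": 5, "support": 2},
    "Provider B": {"fit_to_needs": 5, "scalability": 4, "pricing": 2, "support": 4},
}

ranking = rank_providers(scores, weights)
for provider, score in ranking:
    print(provider, round(score, 2))
```

The ranking is only as good as the weights you choose, so it is worth agreeing on them before you start scoring individual providers.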

Conclusion

None of the players named above will let you down; all of them are excellent DaaS providers. Ultimately, it comes down to which cloud service best satisfies your needs while delivering cost savings.

When you’re short on time and need to enable a large workforce, it’s challenging to examine every DaaS provider and make an informed decision.

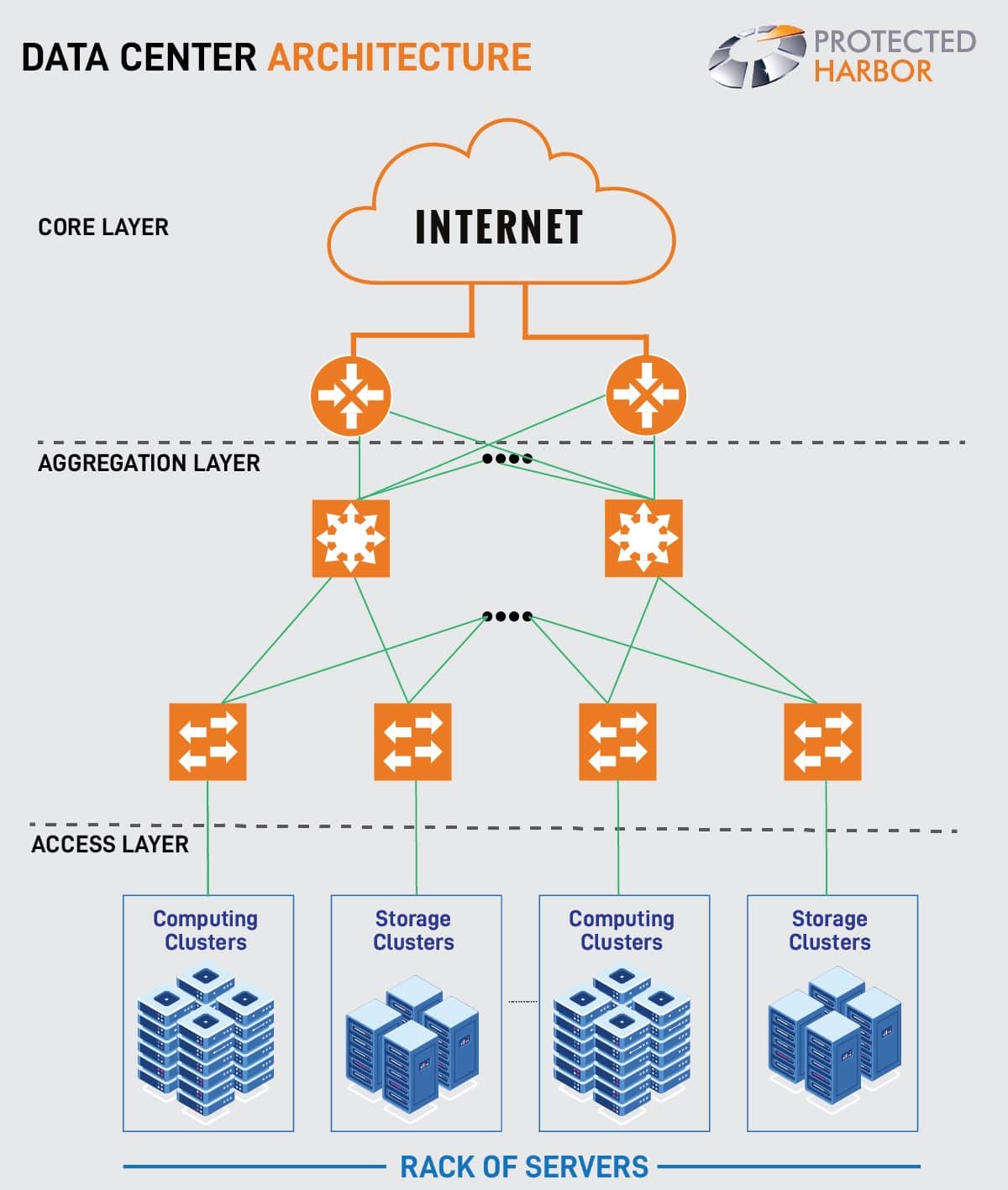

In a DaaS solution like Protected Harbor Desktop, we leverage a unified data center to deliver desktop virtualization services to end-users over the internet, on their preferred device and at their preferred time. Regular snapshots and incremental backups keep your essential data safe.

Protected Desktop is a cloud-based virtual desktop that provides a wholly virtualized Windows environment. Running on one of the most recent operating systems (OS), it gives your company a highly secure desktop with integrated multi-factor authentication and your productivity applications. With our on-demand recovery strategy, we monitor your applications for warning signs that may require proactive action.

Protected Harbor alleviates the problems that come with legacy IT systems. Another significant benefit of our high-quality DaaS solution is that it lets you extend the life of endpoint devices that would otherwise be obsolete. Set up your desktop; click.