5 Ways to Increase your Data Center Uptime


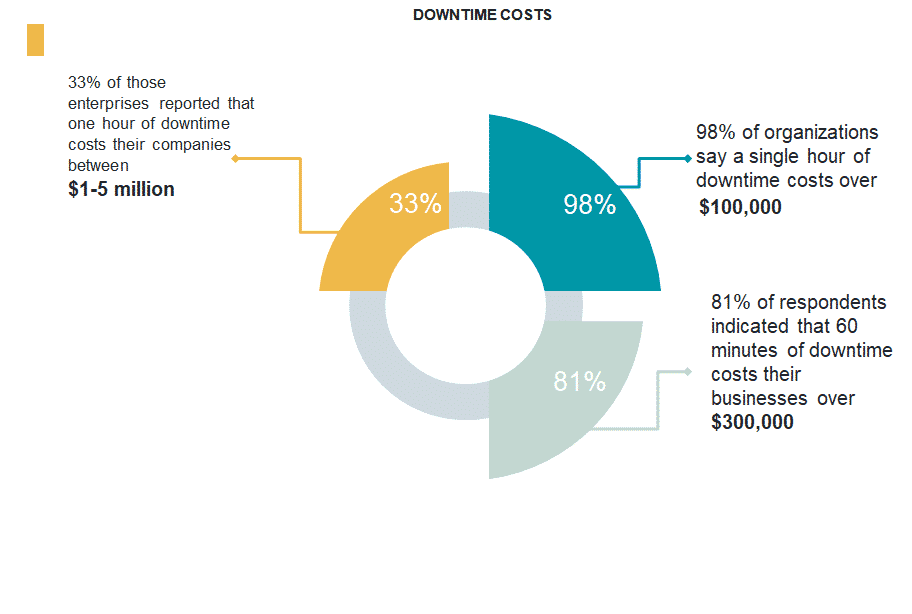

A data center will not survive unless it can deliver very high uptime, often quoted as 99.9999% ("six nines"), which allows only about 31.5 seconds of downtime per year. Many customers choose a data center precisely to avoid unexpected outages, and for some of them even a few seconds of downtime can have a huge impact. There are several effective ways to increase data center uptime and avoid these issues.
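To make uptime targets concrete, here is a small Python sketch that converts an availability percentage into the downtime it permits per year. It assumes a 365-day year and counts planned and unplanned downtime the same way; `allowed_downtime_seconds` is a hypothetical helper name used only for illustration.

```python
# Convert an availability target (e.g. 99.9999%) into the downtime it allows.
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # assumes a 365-day year (no leap day)


def allowed_downtime_seconds(availability_percent: float,
                             period_seconds: int = SECONDS_PER_YEAR) -> float:
    """Return the maximum downtime, in seconds, permitted over the period."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be between 0 and 100")
    return period_seconds * (1 - availability_percent / 100)


# Print the yearly downtime budget for common "nines" targets.
for target in (99.9, 99.99, 99.999, 99.9999):
    secs = allowed_downtime_seconds(target)
    print(f"{target}% -> {secs:,.1f} s/year ({secs / 60:,.1f} min)")
```

At 99.9% the yearly budget is still almost 9 hours; each extra nine cuts it by a factor of ten, down to roughly 31.5 seconds at six nines.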

-

Eliminate Single Points of Failure

Always use high availability (HA) for your infrastructure (routers, switches, servers, power, DNS, and ISP connections), and set up HA for your applications as well. If any hardware device or application fails, we can move to a second server or device and avoid unexpected downtime.
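A rough way to see why every layer needs redundancy is to compare components in series (every one must work) with components in parallel (any one is enough). The Python sketch below assumes failures are independent, which is optimistic for devices that share power or a rack, and the availability numbers in the example lines are made-up illustrations, not vendor figures.

```python
from math import prod


def parallel_availability(availabilities: list[float]) -> float:
    """Availability of redundant components where ANY one working keeps the service up.

    Assumes independent failures: the service is down only if all
    redundant components are down at the same time.
    """
    all_down = prod(1 - a for a in availabilities)
    return 1 - all_down


def series_availability(availabilities: list[float]) -> float:
    """Availability of a chain where EVERY component must work."""
    return prod(availabilities)


# Hypothetical numbers: a 99.9% switch and 99.5% servers.
single_switch = 0.999
switch_pair = parallel_availability([0.999, 0.999])
server_pair = parallel_availability([0.995, 0.995])

print("single switch + server pair:", series_availability([single_switch, server_pair]))
print("switch pair   + server pair:", series_availability([switch_pair, server_pair]))
```

Even with redundant servers, the single switch holds the whole chain below its own 99.9%, so it remains a single point of failure until it is paired too.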

-

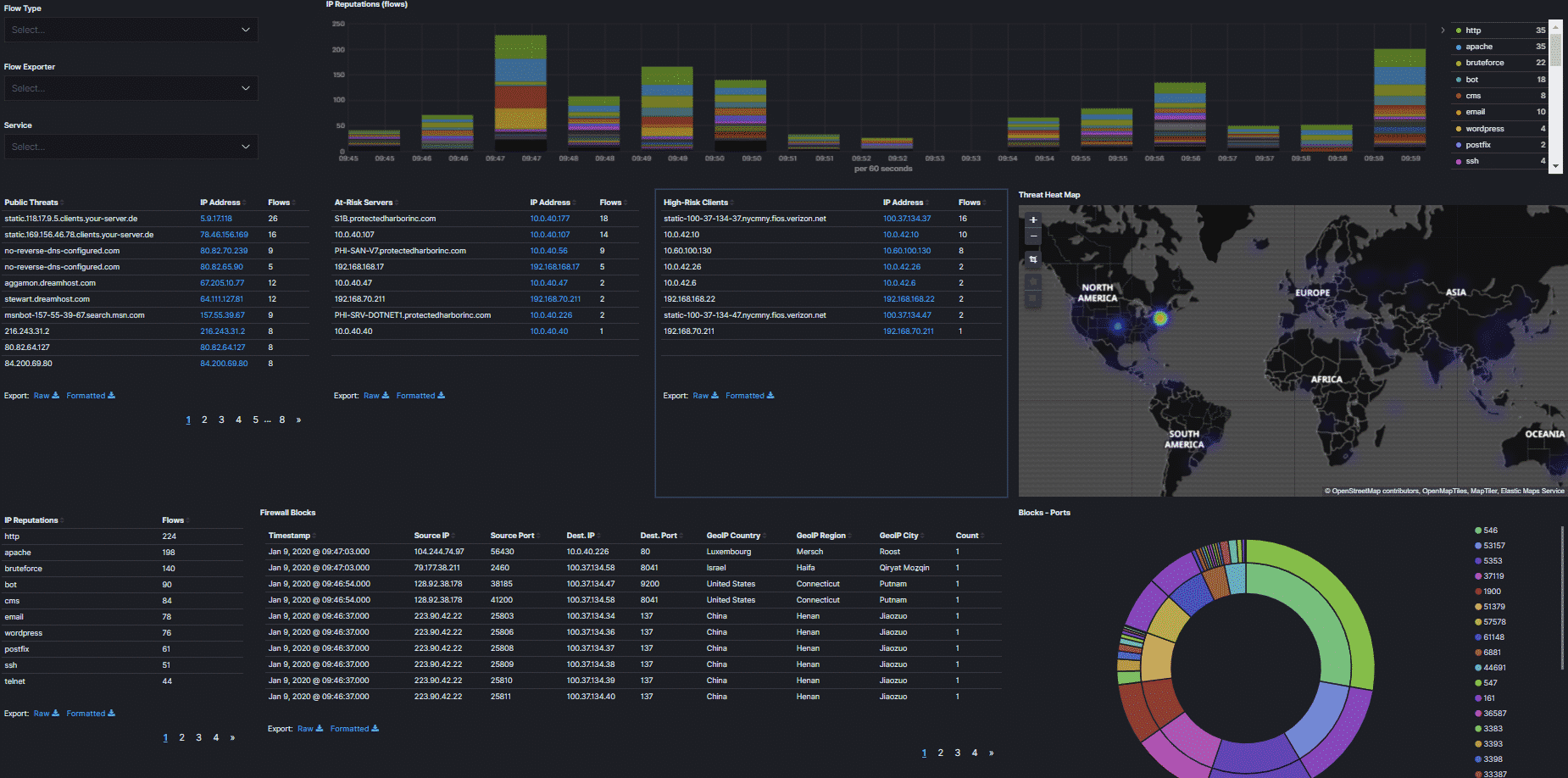

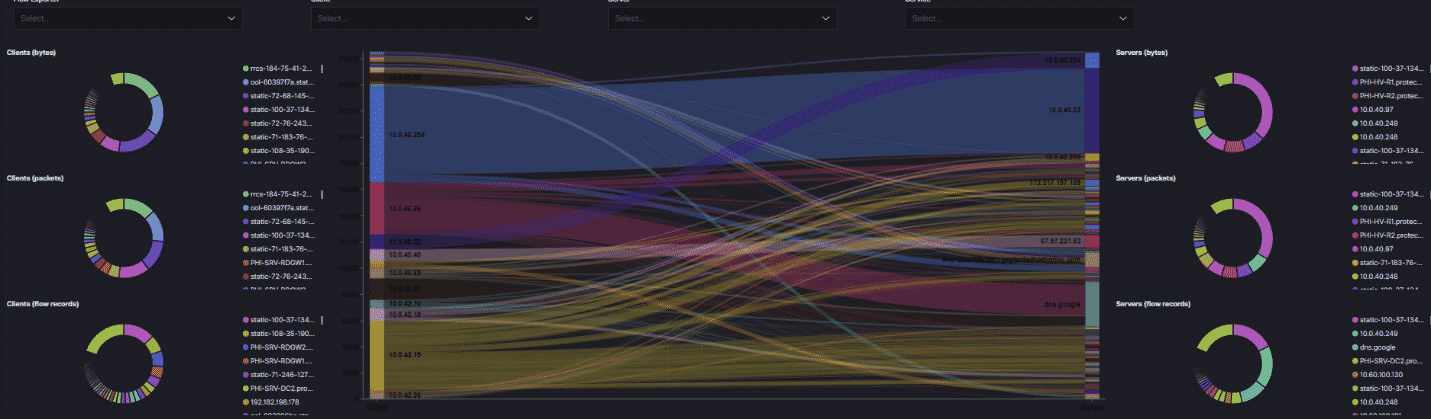

Monitoring

An effective monitoring system reports the status of every system. If anything goes wrong, we can fail over to the second device in the pair and then investigate the faulty one. This way, data center admins can find issues before end users report them.
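The monitoring idea can be sketched as a poller that runs a health check against each device and flags a device only after several consecutive failures, so a single dropped probe does not trigger a failover. This is a minimal Python sketch, not a production tool: the TCP connect check, the `HealthMonitor` class, and the default threshold of 3 are illustrative assumptions.

```python
import socket
from typing import Callable


def tcp_check(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, DNS failure, or timeout
        return False


class HealthMonitor:
    """Count consecutive failed checks per device and flag it at a threshold."""

    def __init__(self, checks: dict[str, Callable[[], bool]], failure_threshold: int = 3):
        self.checks = checks
        self.failure_threshold = failure_threshold
        self.failures = {name: 0 for name in checks}

    def poll_once(self) -> list[str]:
        """Run every check once; return devices that just reached the threshold."""
        newly_down = []
        for name, check in self.checks.items():
            if check():
                self.failures[name] = 0  # any success resets the counter
            else:
                self.failures[name] += 1
                # Report only on the poll where the threshold is first reached,
                # so one outage produces one alert rather than one per poll.
                if self.failures[name] == self.failure_threshold:
                    newly_down.append(name)
        return newly_down
```

In practice you would call `poll_once()` on a timer, for example every 30 seconds, and send the returned device names to your alerting channel; the device names and addresses you register are your own.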

-

Updating and Maintenance

Keep all systems up to date and perform regular maintenance on every device to avoid security breaches through operating system vulnerabilities. Keep your applications up to date as well. Planned maintenance is always better than unexpected downtime. Also, test all applications in a test lab before deploying them to the production environment, so application-related issues are caught early.
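One way to combine planned maintenance with HA is a rolling update: patch one node at a time and verify its health before moving on, so its partner keeps serving traffic. The sketch below is a hypothetical Python outline; `rolling_update`, `apply_update`, and `is_healthy` stand in for whatever patching and health-check tooling you actually use.

```python
from typing import Callable, Optional


def rolling_update(
    nodes: list[str],
    apply_update: Callable[[str], None],
    is_healthy: Callable[[str], bool],
) -> tuple[list[str], Optional[str]]:
    """Update nodes one at a time, stopping at the first unhealthy node.

    Returns (nodes updated successfully, the node that failed or None).
    Only one node is out of service at a time, so its HA partner can
    keep serving traffic during the maintenance window.
    """
    updated: list[str] = []
    for node in nodes:
        apply_update(node)  # e.g. install OS or application patches
        if not is_healthy(node):
            # Stop here so a bad patch affects one node, not the whole cluster.
            return updated, node
        updated.append(node)
    return updated, None
```

Running it against the test-lab nodes first, and continuing to production only when it reports no failed node, follows the advice to test before deploying.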

-

Ensure Automatic Failover

Automatic failover protects against human error. For example, if we miss a notification in the monitoring system and one of our applications crashes as a result, automatic failover moves the workload to an available server. Therefore, end users will not notice any downtime on their end.
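Automatic failover can be sketched as a pool that keeps using the active server while it is healthy and promotes the next healthy server, in preference order, when it is not. This is an illustrative Python sketch; `FailoverPool` and its `is_healthy` callback are hypothetical, and real setups usually delegate this to load balancers, VRRP, or cluster managers.

```python
from typing import Callable, Optional


class FailoverPool:
    """Route to the active server; promote the next healthy one when it fails."""

    def __init__(self, servers: list[str], is_healthy: Callable[[str], bool]):
        if not servers:
            raise ValueError("need at least one server")
        self.servers = servers  # ordered by preference: primary first
        self.is_healthy = is_healthy
        self.active = servers[0]

    def current(self) -> Optional[str]:
        """Return a healthy server to use, failing over automatically if needed."""
        if self.is_healthy(self.active):
            return self.active
        for server in self.servers:
            if server != self.active and self.is_healthy(server):
                self.active = server  # failover without operator action
                return server
        return None  # every server is down: a real outage
```

The pool deliberately does not switch back to the primary as soon as it recovers; skipping automatic failback keeps traffic from flapping between servers if the primary is unstable.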

-

Provide Excellent Support

We always need to take good care of our customers. We need to be available 24/7 to help them and provide solutions quickly, so customers do not lose valuable time dealing with IT-related issues.