Outages and Downtime; Is it a big deal?

Downtime and outages are costly for any company. According to industry research by Gartner, the average cost of downtime can reach $300,000 per hour. As a business owner, safeguarding your online presence from unexpected outages should be a high priority. Imagine how your clients feel when they visit your website only to find an “Error: website down” or “Server error” message, or when half your office is unable to log in and work.
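To put the hourly figure in perspective, here is a rough sketch of the arithmetic. It uses the Gartner average quoted above as the cost per hour. The availability levels in the loop and the function names are illustrative assumptions, not measurements from any real system.

```python
# Rough downtime-cost arithmetic (a sketch, not a financial model).
HOURS_PER_YEAR = 365 * 24   # 8760 hours, ignoring leap years
COST_PER_HOUR = 300_000     # USD per hour, the industry average quoted above


def annual_downtime_hours(availability_percent: float) -> float:
    """Hours per year a system is unavailable at the given availability."""
    return HOURS_PER_YEAR * (1 - availability_percent / 100)


def annual_downtime_cost(availability_percent: float,
                         cost_per_hour: float = COST_PER_HOUR) -> float:
    """Estimated yearly cost of that downtime at a flat hourly rate."""
    return annual_downtime_hours(availability_percent) * cost_per_hour


if __name__ == "__main__":
    # Illustrative availability levels (assumptions for comparison only).
    for level in (99.0, 99.9, 99.99):
        hours = annual_downtime_hours(level)
        cost = annual_downtime_cost(level)
        print(f"{level}% uptime: {hours:.2f} hours down, about ${cost:,.0f} per year")
```

At 99% availability a system is down for roughly 87.6 hours a year, while at 99.99% that shrinks to about 53 minutes. Your own hourly cost may be far lower or higher than the average, so treat the output as an order-of-magnitude estimate.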

You may think that some downtime once in a while wouldn’t do much harm to your business. But let me tell you, it’s a big deal.

Downtime and outages are hostile to your business

Whether you’re a large company or a small business, IT outages can be extremely costly. Businesses are becoming more dependent on technology and cloud infrastructure over time. Customer expectations are rising too, which means that if your system is down and customers can’t reach you, they will go elsewhere. Every customer is valuable, and you don’t want to lose one to an outage. Outages and downtime affect your business in several less obvious ways.

Hampers Brand Image

Of all the ways outages impact your business, this is the worst, because it affects you in the long run. It can demolish a reputation that took years to build. For example, suppose a customer regularly experiences outages that make it difficult to use your services and products. In that case, they will switch to another company and share their negative experiences with others on social platforms. Poor word of mouth may push away potential customers, and your business’s reputation takes a hit.

Loss of productivity and business opportunities

If your servers crash or your IT infrastructure goes down, productivity and profits go down with them. Employees and other parties are left stranded without the resources to complete their work. A network outage can drag down overall productivity in what we call a domino effect: it disrupts the supply chain, which multiplies the impact of the downtime. For example, a recent AWS (Amazon Web Services) outage affected millions of people, along with the supply chain and the delivery of products and services across Amazon’s own platforms and the third-party companies that share the same platform.

For companies that depend on online sales, server outages and downtime are a nightmare. Any loss of connectivity means customers can’t access your products or services online, which leads to fewer customers and lower revenue. The best-case scenario is that the outage is resolved quickly, but imagine if the downtime persists for hours or days and affects a significant number of online customers. A broken sales funnel discourages customers from doing business with you again. In those cases, the effects of an outage can be disastrous.

So how do you prevent system outages?

Downtime and outages are directly related to the capabilities of your servers and IT infrastructure. Preventing them comes down to three things: Anticipation, Monitoring, and Response. To cover these aspects, we created a foolproof strategy called AOA (Application Outage Avoidance), which we also call, in simpler words, Always on Availability. In AOA, we set up several things to prevent and tackle outages.

- The first is to anticipate and be proactive. We prepare in advance for possible scenarios and keep them in check.

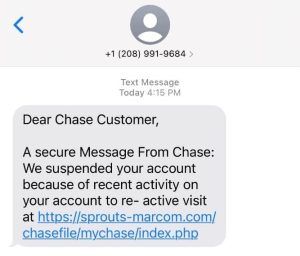

- The second is in-depth monitoring of the servers. We don’t just check whether a server is up or down; we look at RAM, CPU, disk performance, and application performance metrics such as page life expectancy in SQL Server. We also tie the antivirus directly into our monitoring system. If Windows Defender detects an infected file, it triggers an alert in our monitoring system so we can respond within 5 minutes and quarantine or clean the infected file.

- The final big piece is geo-blocking and blacklisting. Our edge firewalls block entire countries and block bad IPs by reading and updating public IP blacklists every 4 hours to keep up with the latest known attacks. We also use a Windows failover cluster, which eliminates a single point of failure. For example, the client will remain online if a host goes down.

- Other features include protection against ransomware, viruses, and phishing attacks, complete IT support, and a private cloud backup. Together, these have helped us achieve 99.99% uptime for our clients.
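The monitoring and blacklisting checks described in the list above can be sketched in a few lines of Python. This is a minimal illustration, not Protected Harbor's actual tooling: the threshold values, the `ServerMetrics` fields, and the function names are all hypothetical, and the IP addresses come from ranges reserved for documentation.

```python
import ipaddress
from dataclasses import dataclass
from typing import List

# Hypothetical thresholds for illustration only; tune them for your environment.
MAX_CPU_PERCENT = 90.0
MAX_RAM_PERCENT = 90.0
MAX_DISK_LATENCY_MS = 25.0
MIN_PAGE_LIFE_EXPECTANCY_S = 300.0


@dataclass
class ServerMetrics:
    cpu_percent: float
    ram_percent: float
    disk_latency_ms: float
    page_life_expectancy_s: float
    infected_files: int  # count reported by the antivirus integration


def find_alerts(m: ServerMetrics) -> List[str]:
    """Return the name of every check that crosses its threshold."""
    alerts = []
    if m.cpu_percent > MAX_CPU_PERCENT:
        alerts.append("cpu")
    if m.ram_percent > MAX_RAM_PERCENT:
        alerts.append("ram")
    if m.disk_latency_ms > MAX_DISK_LATENCY_MS:
        alerts.append("disk")
    if m.page_life_expectancy_s < MIN_PAGE_LIFE_EXPECTANCY_S:
        alerts.append("page_life_expectancy")
    if m.infected_files > 0:
        alerts.append("antivirus")
    return alerts


def is_blocked(ip: str, blocklist: List[str]) -> bool:
    """True if the address falls inside any blocklisted address or CIDR range."""
    address = ipaddress.ip_address(ip)
    return any(address in ipaddress.ip_network(entry) for entry in blocklist)


if __name__ == "__main__":
    sample = ServerMetrics(cpu_percent=97.0, ram_percent=60.0,
                           disk_latency_ms=5.0, page_life_expectancy_s=120.0,
                           infected_files=1)
    print(find_alerts(sample))

    # Example entries only; a real list would be refreshed from public feeds.
    blocklist = ["203.0.113.0/24", "198.51.100.7"]
    print(is_blocked("203.0.113.45", blocklist))
```

The point of the sketch is that a server can be "up" and still fail several of these checks, which is why a simple up/down ping is not enough. In practice the metrics would come from a monitoring agent and the blocklist would be reloaded on a schedule, such as the 4-hour interval mentioned above.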

These features are built into Protected Harbor’s systems and solutions to provide an optimum level of control along with advanced safety and security. IT outages can be frustrating, but we actively listen to clients to build a structure that supports your business and workflow, achieving the right mix of IT infrastructure and business operations.

Visit Protected Harbor to end outages and downtime once and for all.